Power Transformer Vs StandardScaler

In my machine learning journey, more often than not, I have found that feature preprocessing is a more effective technique for improving my evaluation metric than any other step, such as choosing a model algorithm or tuning hyperparameters. Feature transformation and scaling is one of the most crucial steps in building a machine learning model.

What's the difference between normalization and standardization? Normalization changes the range of a … `StandardScaler` removes the mean and scales the data to unit variance. The scaling shrinks the range of the feature values as shown in …

`PowerTransformer` tries to make the data more Gaussian-like. There are two options for … It attempts optimal scaling to stabilize variance and minimize skewness through maximum likelihood estimation.

`from sklearn.preprocessing import StandardScaler, RobustScaler, QuantileTransformer, PowerTransformer`. Next, we will instantiate each.
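As a rough illustration of instantiating each of the four imported transformers, the sketch below fits them on a synthetic right-skewed feature and reports the mean, standard deviation, and skewness of the result. The log-normal data, the random seeds, the `n_quantiles=100` setting, and the use of `scipy.stats.skew` are assumptions made for the example and do not come from the original text.

```python
import numpy as np
from scipy.stats import skew
from sklearn.preprocessing import (
    StandardScaler,
    RobustScaler,
    QuantileTransformer,
    PowerTransformer,
)

# Synthetic, strongly right-skewed feature (assumption: log-normal toy data).
rng = np.random.default_rng(0)
X = rng.lognormal(mean=0.0, sigma=1.0, size=(1000, 1))

# Instantiate each transformer; parameter choices here are illustrative only.
scalers = {
    "StandardScaler": StandardScaler(),
    "RobustScaler": RobustScaler(),
    "QuantileTransformer": QuantileTransformer(
        n_quantiles=100, output_distribution="normal", random_state=0
    ),
    "PowerTransformer": PowerTransformer(method="yeo-johnson"),
}

original_skew = float(skew(X.ravel()))
results = {}
for name, scaler in scalers.items():
    Xt = scaler.fit_transform(X)
    results[name] = {
        "mean": float(Xt.mean()),
        "std": float(Xt.std()),
        "skew": float(skew(Xt.ravel())),
    }
    print(name, results[name])

print("original skew:", original_skew)
```

Because `StandardScaler` applies only a linear shift and rescale, the skewness of its output matches that of the input, whereas `PowerTransformer` changes the shape of the distribution and should bring the skewness much closer to zero.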

From www.iqsdirectory.com

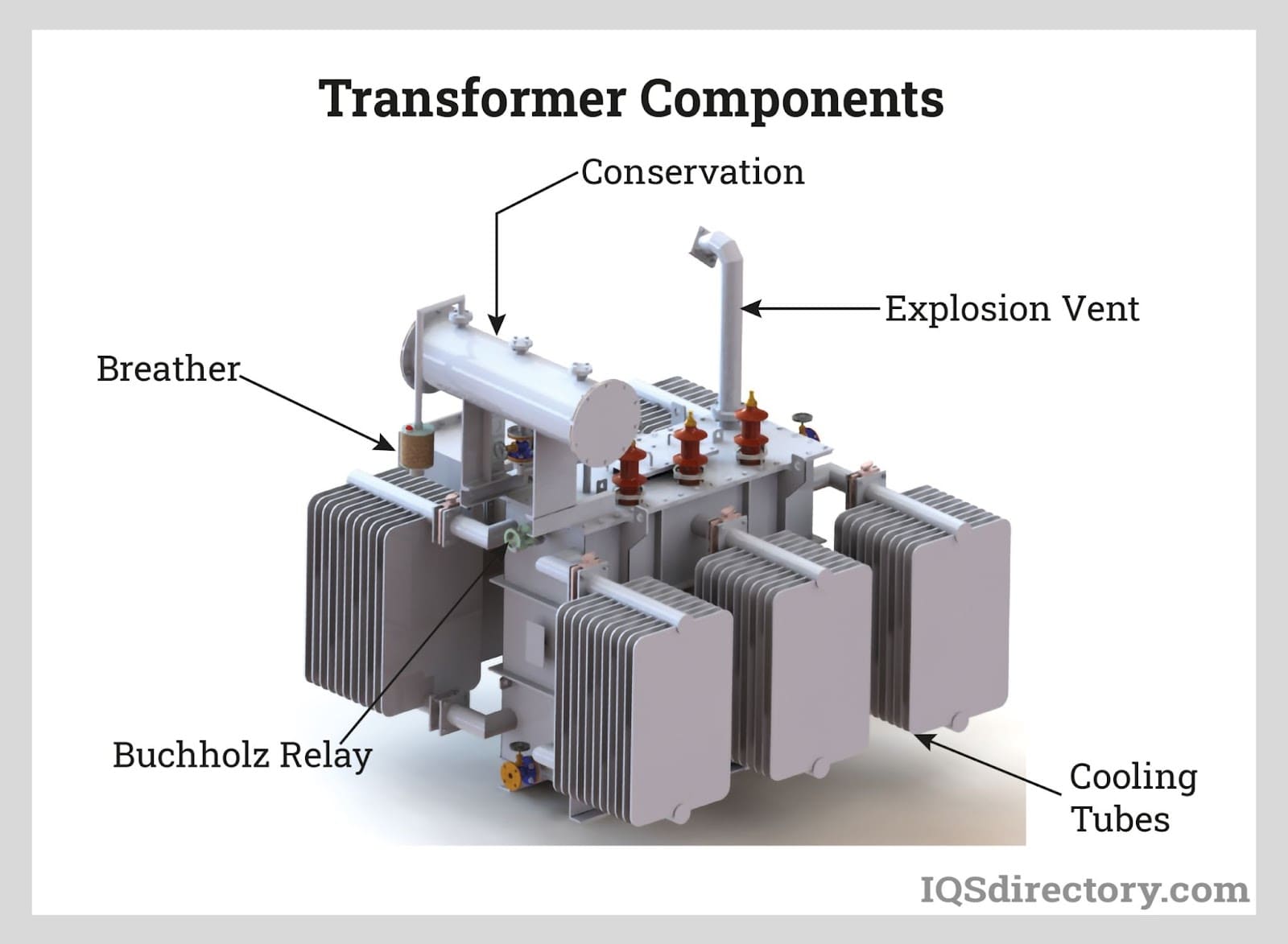

Power Transformers Types, Uses, Features and Benefits

From www.ztelecgroup.com

Power,Transformers,Distribution,very,important,part,the,

From tikweld.com

How Electrical Transformers Work A Simplified Guide Tikweld products

From machinelearningmastery.com

How to Use StandardScaler and MinMaxScaler Transforms in Python

From metapowersolutions.com

Differences Between Power Transformers And Distribution Transformers

From askanydifference.com

Power vs Distribution Transformer Difference and Comparison

From www.easybom.com

The Difference between Power Transformers and Distribution Transformers

From www.electricaltechnology.org

Difference between Power Transformer & Distribution Transformer

From in.pinterest.com

Pin on Electricalscope

From www.youtube.com

POWER TRANSFORMER AND DISTRIBUTION TRANSFORMER! DIFFERENCE BETWEEN

From www.electricalvolt.com

power transformer vs distribution transformer Archives Electrical Volt

From www.youtube.com

DIFFERENCE BETWEEN POWER TRANSFORMER AND DISTRIBUTION TRANSFORMER YouTube

From www.youtube.com

POWER TRANSFORMER Vs DISTRIBUTION TRANSFORMER। DIFFERENCE BETWEEN PTR

From www.youtube.com

Power Transformer vs Distribution Transformer YouTube

From lambdageeks.com

Power Transformer Vs Voltage Transformer Comparative Analysis And

From techiescience.com

Power Transformer vs Voltage Transformer A Comprehensive Guide

From www.theengineerspost.com

16 Different Types of Transformers and Their Working [PDF]

From www.pinterest.com

Power Transformer vs Distribution Transformer Transformers, Current